Continuous-time stochastic processes with stationary independent increments are known as Lévy processes. In the previous post, it was seen that processes with independent increments are described by three terms: the covariance structure of the Brownian motion component, a drift term, and a measure describing the rate at which jumps occur. As Lévy processes are a special case of independent increments processes, the situation for them is similar. However, stationarity of the increments does simplify things a bit. We start with the definition.

Definition 1 (Lévy process) A d-dimensional Lévy process X is a stochastic process taking values in $\mathbb{R}^d$ such that

- independent increments: $X_t - X_s$ is independent of $\{X_u \colon u \le s\}$ for any $s < t$.
- stationary increments: $X_{s+t} - X_s$ has the same distribution as $X_t - X_0$ for any $s, t > 0$.
- continuity in probability: $X_s \to X_t$ in probability as s tends to t.

More generally, it is possible to define the notion of a Lévy process with respect to a given filtered probability space $(\Omega, \mathcal{F}, \{\mathcal{F}_t\}_{t \ge 0}, \mathbb{P})$. In that case, we also require that X is adapted to the filtration and that $X_t - X_s$ is independent of $\mathcal{F}_s$ for all $s < t$. In particular, if X is a Lévy process according to Definition 1 then it is also a Lévy process with respect to its natural filtration $\mathcal{F}_t = \sigma(X_s \colon s \le t)$. Note that slightly different definitions are sometimes used by different authors. It is often required that $X_0$ is zero and that X has cadlag sample paths. These are minor points and, as will be shown, any process satisfying the definition above will admit a cadlag modification.

The most common example of a Lévy process is Brownian motion, where $X_t - X_s$ is normally distributed with zero mean and variance $t - s$ independently of $\mathcal{F}_s$. Other examples include Poisson processes, compound Poisson processes, the Cauchy process, gamma processes and the variance gamma process.

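For example, the symmetric Cauchy distribution on the real numbers with scale parameter $\gamma > 0$ has probability density function p and characteristic function $\phi$ given by,

$$p(x) = \frac{\gamma}{\pi(\gamma^2 + x^2)}, \qquad \phi(a) \equiv \mathbb{E}\left[e^{iaX}\right] = e^{-\gamma|a|}. \tag{1}$$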

From the characteristic function it can be seen that if X and Y are independent Cauchy random variables with scale parameters and

respectively then

is Cauchy with parameter

. We can therefore consistently define a stochastic process

such that

has the symmetric Cauchy distribution with parameter

independent of

, for any

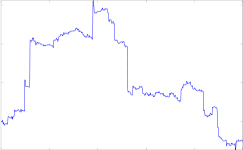

. This is called a Cauchy process, which is a purely discontinuous Lévy process. See Figure 1.
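The construction above can be tried out numerically. The following is a minimal sketch, not taken from the original post: it builds a Cauchy process on a time grid by summing independent Cauchy increments, each sampled by inverting the Cauchy distribution function. The function names and the grid are illustrative choices.

```python
import math
import random


def cauchy_increment(dt, rng):
    """Sample a symmetric Cauchy variable with scale dt by inverting its CDF."""
    u = rng.random()
    return dt * math.tan(math.pi * (u - 0.5))


def simulate_cauchy_path(n_steps, dt, rng):
    """Return [X_0, X_dt, ..., X_{n_steps * dt}] with X_0 = 0.

    Increments over disjoint intervals are independent and Cauchy with
    scale equal to the interval length.
    """
    path = [0.0]
    for _ in range(n_steps):
        path.append(path[-1] + cauchy_increment(dt, rng))
    return path


def median(values):
    """Middle element of the sorted values (upper median for even length)."""
    ordered = sorted(values)
    return ordered[len(ordered) // 2]


if __name__ == "__main__":
    rng = random.Random(0)
    # Sample X_1 many times, each built from 10 increments of scale 0.1.
    samples = [simulate_cauchy_path(10, 0.1, rng)[-1] for _ in range(20000)]
    # For scale gamma the median of |X| is gamma, so this should be near 1.
    print(median([abs(x) for x in samples]))
```

Since the scale parameters of independent Cauchy variables add, the terminal value has scale 1 however the interval is subdivided, which is what the median check illustrates.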

Lévy processes are determined by the triple $(\Sigma, b, \nu)$, where $\Sigma$ describes the covariance structure of the Brownian motion component, b is the drift component, and $\nu$ describes the rate at which jumps occur. The distribution of the process is given by the Lévy-Khintchine formula, equation (3) below.

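Theorem 2 (Lévy-Khintchine) Let X be a d-dimensional Lévy process. Then, there is a unique function $\psi \colon \mathbb{R}^d \to \mathbb{C}$ such that

$$\mathbb{E}\left[e^{ia\cdot(X_t - X_0)}\right] = e^{t\psi(a)} \tag{2}$$

for all $a \in \mathbb{R}^d$ and $t \ge 0$. Also, $\psi(a)$ can be written as

$$\psi(a) = ia\cdot b - \frac{1}{2}a^{\rm T}\Sigma a + \int_{\mathbb{R}^d}\left(e^{ia\cdot x} - 1 - \frac{ia\cdot x}{1 + \Vert x\Vert}\right)d\nu(x) \tag{3}$$

where $\Sigma$, b and $\nu$ are uniquely determined and satisfy the following,

- $\Sigma \in \mathbb{R}^{d^2}$ is a positive semidefinite matrix.
- $b \in \mathbb{R}^d$.
- $\nu$ is a Borel measure on $\mathbb{R}^d$ with $\nu(\{0\}) = 0$ and,

$$\int_{\mathbb{R}^d} \Vert x\Vert^2 \wedge 1\, d\nu(x) < \infty. \tag{4}$$

Furthermore, $(\Sigma, b, \nu)$ uniquely determine all finite distributions of the process $X - X_0$.

Conversely, if $(\Sigma, b, \nu)$ is any triple satisfying the three conditions above, then there exists a Lévy process satisfying (2,3).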

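Proof: This result is a special case of Theorem 1 from the previous post, where it was shown that there is a continuous function $\mathbb{R}^d \times \mathbb{R}_+ \to \mathbb{C}$, $(a, t) \mapsto \psi_t(a)$ such that $\psi_0(a) = 0$ and

$$\mathbb{E}\left[e^{ia\cdot(X_t - X_0)}\right] = e^{\psi_t(a)}.$$

Using independence and stationarity of the increments of X,

$$e^{\psi_{s+t}(a)} = \mathbb{E}\left[e^{ia\cdot(X_{s+t} - X_s)}\right]\mathbb{E}\left[e^{ia\cdot(X_s - X_0)}\right] = e^{\psi_t(a)}e^{\psi_s(a)}.$$

So, $\psi_{s+t}(a) = \psi_s(a) + \psi_t(a)$ and, by continuity in t, this gives $\psi_t(a) = t\psi_1(a)$. Taking $\psi(a) = \psi_1(a)$ gives (2).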
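The step from additivity to linearity in t is the usual argument for Cauchy's functional equation. As a sketch, fix a and write $g(t)$ for the exponent at time t (the name g is introduced here only for illustration):

```latex
\begin{align*}
g(s+t) &= g(s) + g(t) && (s, t \ge 0), \\
g(m/n) &= m\, g(1/n) = \tfrac{m}{n}\, g(1) && (m, n \in \mathbb{N}), \\
g(t) &= \lim_{q \to t,\ q \in \mathbb{Q}_+} g(q) = t\, g(1) && \text{by continuity in } t.
\end{align*}
```

The second line uses $g(1) = n\,g(1/n)$, which is additivity applied n times.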

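Again using Theorem 1 of the previous post, there is a uniquely determined triple $(\Sigma_t, b_t, \mu)$ such that

$$\psi_t(a) = ia\cdot b_t - \frac{1}{2}a^{\rm T}\Sigma_t a + \int_{[0,t]\times\mathbb{R}^d}\left(e^{ia\cdot x} - 1 - \frac{ia\cdot x}{1 + \Vert x\Vert}\right)d\mu(s, x). \tag{5}$$

Here, $t \mapsto \Sigma_t$ is a continuous function from $\mathbb{R}_+$ to $\mathbb{R}^{d^2}$ such that $\Sigma_t - \Sigma_s$ is positive semidefinite for all $t \ge s$. Also, $t \mapsto b_t$ is a continuous function from $\mathbb{R}_+$ to $\mathbb{R}^d$ and $\mu$ is a Borel measure on $\mathbb{R}_+ \times \mathbb{R}^d$ with $\mu(\{t\} \times \mathbb{R}^d) = 0$, $\mu(\mathbb{R}_+ \times \{0\}) = 0$ and

$$\int_{[0,t]\times\mathbb{R}^d} \Vert x\Vert^2 \wedge 1\, d\mu(s, x) < \infty.$$

Taking $\Sigma = \Sigma_1$, $b = b_1$ and defining $\nu$ by

$$\nu(A) = \mu([0,1] \times A)$$

it can be seen that (3) follows from (5) with $t = 1$, and that $(\Sigma, b, \nu)$ satisfy the required conditions. Conversely, if (3) is satisfied, then taking $\Sigma_t = t\Sigma$, $b_t = tb$ and $d\mu(t, x) = dt\,d\nu(x)$ gives (5). Then, uniqueness of $(\Sigma_t, b_t, \mu)$ implies that $(\Sigma, b, \nu)$ are uniquely determined by (3).

Finally, if $(\Sigma, b, \nu)$ satisfy the required conditions, then taking $\Sigma_t = t\Sigma$, $b_t = tb$ and $d\mu(t, x) = dt\,d\nu(x)$, Theorem 1 of the previous post says that there exists an independent increments process satisfying (5). This is then the required Lévy process. ⬜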

The measure $\nu$ above is called the Lévy measure of X, $(\Sigma, b, \nu)$ are referred to as the characteristics of X, and it is said to be purely discontinuous if $\Sigma = 0$. Note that a Lévy process with zero Lévy measure $\nu = 0$ satisfies $\psi(a) = ia\cdot b - \frac{1}{2}a^{\rm T}\Sigma a$, so is a Brownian motion with covariance matrix $\Sigma$ and drift $b$.
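To make the role of the characteristics concrete, here is a small sketch (not from the original post) that evaluates the Lévy-Khintchine exponent for a real-valued process whose Lévy measure is a finite sum of point masses. It assumes the truncation term $ia x/(1+|x|)$ inside the jump integral; the function names and the dictionary representation of the Lévy measure are illustrative.

```python
import cmath


def levy_exponent(a, sigma2, b, atoms):
    """Evaluate psi(a) for a real-valued Levy process.

    sigma2 is the Brownian variance, b the drift characteristic, and atoms
    maps a jump size x to its rate nu({x}) (finitely many point masses).
    Assumes the truncation term i*a*x / (1 + |x|) in the jump integral.
    """
    psi = 1j * a * b - 0.5 * sigma2 * a * a
    for x, rate in atoms.items():
        psi += rate * (cmath.exp(1j * a * x) - 1 - 1j * a * x / (1 + abs(x)))
    return psi


def characteristic_function(a, t, sigma2, b, atoms):
    """E[exp(i a (X_t - X_0))] = exp(t psi(a))."""
    return cmath.exp(t * levy_exponent(a, sigma2, b, atoms))


if __name__ == "__main__":
    a, lam = 0.7, 3.0
    # Zero Levy measure: standard Brownian motion, psi(a) = -a^2 / 2.
    print(levy_exponent(a, 1.0, 0.0, {}))
    # Poisson process of rate lam: unit jumps, with b = lam / 2 under this
    # truncation convention, so that psi(a) = lam * (exp(i a) - 1).
    print(levy_exponent(a, 0.0, lam / 2, {1.0: lam}))
```

With this convention the drift characteristic of a Poisson process is $\lambda/2$ rather than zero, because the truncation term removes half of each unit jump.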

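As an example, consider the purely discontinuous real-valued Lévy process with characteristics $\Sigma = 0$, $b = 0$ and $d\nu(x) = \frac{dx}{\pi x^2}$. This satisfies (4), so determines a well-defined process. Using the Lévy-Khintchine formula we can compute its characteristic function,

$$\begin{aligned}
\psi(a) &= \int_{-\infty}^\infty\left(e^{iax} - 1 - \frac{iax}{1 + |x|}\right)\frac{dx}{\pi x^2} = \frac{1}{\pi}\int_0^\infty\left(e^{iax} + e^{-iax} - 2\right)\frac{dx}{x^2} \\
&= -\frac{4}{\pi}\int_0^\infty \sin^2(ax/2)\,\frac{dx}{x^2} = -\frac{2|a|}{\pi}\int_0^\infty \frac{\sin^2 y}{y^2}\,dy = -|a|.
\end{aligned}$$

Here, the identity $e^{iax} + e^{-iax} - 2 = -4\sin^2(ax/2)$ is being used followed by the substitution $y = |a|x/2$, and the final equality uses $\int_0^\infty y^{-2}\sin^2 y\,dy = \pi/2$. By (2), $X_t - X_0$ then has characteristic function $e^{-t|a|}$. Comparing this with the characteristic function (1) of the Cauchy distribution shows that X is the Cauchy process.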
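The value of this integral can be sanity-checked numerically. The sketch below is an illustration rather than part of the original argument: it approximates $-\frac{4}{\pi}\int_0^\infty \sin^2(ax/2)\,x^{-2}\,dx$ with a midpoint rule on a finite interval plus a crude tail correction, and compares it with $-|a|$. The cutoff, the step size and the tail approximation are ad hoc choices.

```python
import math


def cauchy_exponent(a, upper=200.0, step=0.01):
    """Approximate psi(a) = -(4/pi) * int_0^inf sin^2(a x / 2) / x^2 dx, a != 0.

    Midpoint rule on (0, upper). Beyond `upper`, sin^2 is replaced by its
    average value 1/2, which contributes 1 / (2 * upper) to the integral.
    """
    n = int(round(upper / step))
    total = 0.0
    for k in range(n):
        x = (k + 0.5) * step
        total += math.sin(0.5 * a * x) ** 2 / (x * x)
    integral = total * step + 0.5 / upper
    return -4.0 / math.pi * integral


if __name__ == "__main__":
    for a in (1.0, -2.5):
        # Each printed pair should agree closely.
        print(cauchy_exponent(a), -abs(a))
```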

As mentioned above, Lévy processes are often taken to be cadlag by definition. However, Theorem 2 of the previous post states that all independent increments processes which are continuous in probability have a cadlag version.

Theorem 3 Every Lévy process has a cadlag modification.

We can go further than this.

Theorem 4 Every cadlag Lévy process is a semimartingale.

Proof: Theorem 2 of the previous post states that a cadlag Lévy process X decomposes as $X = W + Y$ where Y is a semimartingale and W is a continuous centered Gaussian process with independent increments, hence a martingale. So, W is a semimartingale and so is X. ⬜

The characteristics of a Lévy process fully determine its finite distributions since, by equation (3), they determine the characteristic function of the increments of the process. The following theorem shows how the characteristics relate to the paths of the process and, in particular, the Lévy measure does indeed describe the jumps. This is just a specialization of Theorem 2 of the previous post to the stationary increments case.

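Theorem 5 Let X be a cadlag d-dimensional Lévy process with characteristics $(\Sigma, b, \nu)$. Then,

- The process

$$Y_t = X_t - X_0 - \sum_{s \le t}\Delta X_s\frac{\Vert\Delta X_s\Vert}{1 + \Vert\Delta X_s\Vert} \tag{6}$$

is integrable, and $\mathbb{E}[Y_t] = tb$. Furthermore, $Y_t - tb$ is a martingale.

- The quadratic variation of X has continuous part $[X^i, X^j]^c_t = t\Sigma^{ij}$.

- For any nonnegative measurable $f \colon \mathbb{R}_+ \times \mathbb{R}^d \to \mathbb{R}$,

$$\mathbb{E}\left[\sum_{t > 0} 1_{\{\Delta X_t \ne 0\}} f(t, \Delta X_t)\right] = \int_0^\infty\int_{\mathbb{R}^d} f(t, x)\,d\nu(x)\,dt.$$

In particular, for any measurable $A \subseteq \mathbb{R}^d$ the process

$$X^A_t \equiv \sum_{s \le t} 1_{\{\Delta X_s \in A \setminus \{0\}\}} \tag{7}$$

is almost surely infinite for all $t > 0$ whenever $\nu(A)$ is infinite, otherwise it is a homogeneous Poisson process of rate $\nu(A)$. If $A_1, \ldots, A_n$ are disjoint measurable subsets of $\mathbb{R}^d$ then $X^{A_1}, \ldots, X^{A_n}$ are independent processes.

Furthermore, letting $\mathcal{P}$ be the predictable sigma-algebra and $f \colon \mathbb{R}_+ \times \Omega \times \mathbb{R}^d \to \mathbb{R}$ be $\mathcal{P} \otimes \mathcal{B}(\mathbb{R}^d)$-measurable such that $f(t, 0) = 0$ and $\int_0^t\int_{\mathbb{R}^d} |f(s, x)|\,d\nu(x)\,ds$ is integrable (resp. locally integrable) then,

$$M_t \equiv \sum_{s \le t} f(s, \Delta X_s) - \int_0^t\int_{\mathbb{R}^d} f(s, x)\,d\nu(x)\,ds \tag{8}$$

is a martingale (resp. local martingale).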

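Proof: The first statement follows directly from the first statement of Theorem 2 of the previous post.

Now apply the decomposition from the second statement of Theorem 2 of the previous post, where W has quadratic variation $[W^i, W^j]_t = t\Sigma^{ij}$ and Y satisfies $[Y^i, Y^j]^c = 0$. This gives

$$[X^i, X^j]^c_t = [W^i, W^j]_t = t\Sigma^{ij}$$

as required.

For the third statement above, define the measure $d\mu(t, x) = dt\,d\nu(x)$ on $\mathbb{R}_+ \times \mathbb{R}^d$. By the third statement of Theorem 2 of the previous post,

$$\mathbb{E}\left[\sum_{t > 0} 1_{\{\Delta X_t \ne 0\}} f(t, \Delta X_t)\right] = \int f\,d\mu = \int_0^\infty\int_{\mathbb{R}^d} f(t, x)\,d\nu(x)\,dt.$$

Also, as stated in Theorem 2 of the previous post, for a measurable $A \subseteq \mathbb{R}_+ \times \mathbb{R}^d$, the random variable

$$\eta(A) \equiv \sum_{t > 0} 1_{\{\Delta X_t \ne 0,\ (t, \Delta X_t) \in A\}}$$

is almost surely infinite whenever $\mu(A) = \infty$ and Poisson distributed of rate $\mu(A)$ otherwise. Furthermore, $\eta(A_1), \ldots, \eta(A_n)$ are independent whenever $A_1, \ldots, A_n$ are disjoint measurable subsets of $\mathbb{R}_+ \times \mathbb{R}^d$. We can apply this to the process $X^A$ defined by (7).

If $A \subseteq \mathbb{R}^d$ satisfies $\nu(A) = \infty$ then $\mu((0, t] \times A) = t\nu(A)$ is infinite for all $t > 0$, so $X^A_t = \eta((0, t] \times A)$ is almost surely infinite. On the other hand, if $\nu(A)$ is finite, consider a sequence of times $0 = t_0 < t_1 < \cdots < t_n$. The increments of $X^A$ are

$$X^A_{t_k} - X^A_{t_{k-1}} = \eta((t_{k-1}, t_k] \times A)$$

which are independent and Poisson distributed with rates $(t_k - t_{k-1})\nu(A)$. So, $X^A$ is a homogeneous Poisson process of rate $\nu(A)$.

If $A_1, \ldots, A_n$ are disjoint measurable subsets of $\mathbb{R}^d$, then $X^{A_1}, \ldots, X^{A_n}$ are Poisson processes (whenever $\nu(A_k) < \infty$) and, by construction, no two can ever jump simultaneously. So, they are independent.

Finally, that (8) is a (local) martingale is given by the final statement of Theorem 2 of the previous post. ⬜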
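As an illustration of the third statement, consider the Cauchy process with Lévy measure $d\nu(x) = dx/(\pi x^2)$. Jumps of absolute size greater than $c$ should arrive as a Poisson process of rate $\nu(\{|x| > c\}) = 2/(\pi c)$. The sketch below samples such jumps directly. It relies on the standard construction of a Poisson point process (Poisson count, then i.i.d. times and sizes), which is an assumption of this example rather than something proved above, and all function names are illustrative.

```python
import math
import random


def cauchy_levy_measure_tail(c):
    """nu({x : |x| > c}) for d nu(x) = dx / (pi x^2)."""
    return 2.0 / (math.pi * c)


def sample_poisson(mean, rng):
    """Sample a Poisson variable by counting unit-rate exponential arrivals."""
    count, elapsed = 0, rng.expovariate(1.0)
    while elapsed < mean:
        count += 1
        elapsed += rng.expovariate(1.0)
    return count


def sample_large_jumps(c, t, rng):
    """Sample the jumps of absolute size > c of a Cauchy process on [0, t].

    Returns a time-sorted list of (time, size) pairs. Assumes that, given
    the count, times are i.i.d. uniform on [0, t] and sizes are i.i.d. with
    law nu restricted to {|x| > c} and normalized.
    """
    n = sample_poisson(t * cauchy_levy_measure_tail(c), rng)
    jumps = []
    for _ in range(n):
        time = rng.uniform(0.0, t)
        # Inverse-CDF sample of the magnitude: P(magnitude > x) = c / x.
        magnitude = c / (1.0 - rng.random())
        sign = 1.0 if rng.random() < 0.5 else -1.0
        jumps.append((time, sign * magnitude))
    return sorted(jumps)


if __name__ == "__main__":
    rng = random.Random(0)
    counts = [len(sample_large_jumps(0.5, 3.0, rng)) for _ in range(4000)]
    # Empirical mean count against the predicted t * nu({|x| > c}).
    print(sum(counts) / len(counts), 3.0 * cauchy_levy_measure_tail(0.5))
```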

The following characterization of the purely discontinuous Lévy processes is an immediate consequence of the second statement of Theorem 5.

Corollary 6 A cadlag Lévy process X is purely discontinuous if and only if its quadratic variation has zero continuous part, $[X^i, X^j]^c = 0$.

Any Lévy process decomposes uniquely into its continuous and purely discontinuous parts.

Lemma 7 A cadlag Lévy process X decomposes uniquely as

$$X = W + Y$$

where W is a continuous centered Gaussian process with independent increments, $W_0 = 0$, and Y is a purely discontinuous Lévy process.

Furthermore, W and Y are independent and if X has characteristics $(\Sigma, b, \nu)$ then W and Y have characteristics $(\Sigma, 0, 0)$ and $(0, b, \nu)$ respectively.

Proof: Theorem 2 of the previous post says that X decomposes uniquely as where W is a continuous centered Gaussian process with independent increments,

, and Y is a semimartingale with independent increments whose quadratic variation has zero continuous part

. Furthermore, W and

are independent Lévy processes with characteristics

and

respectively.

So, taking gives the required decomposition, satisfying the required properties. Conversely, supposing that

is any other such decomposition, uniqueness of the decomposition

gives

and

. ⬜

Recall that for any independent increments process X which is continuous in probability, the space-time process $(t, X_t)$ is Feller. For Lévy processes, where the increments of X are stationary, we can use a very similar proof to show that X itself is a Feller process.

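Lemma 8 Let X be a d-dimensional Lévy process. For each $t \ge 0$ define the transition probability $P_t$ on $\mathbb{R}^d$ by

$$P_t f(x) = \mathbb{E}\left[f(X_t - X_0 + x)\right]$$

for nonnegative measurable $f \colon \mathbb{R}^d \to \mathbb{R}$.

Then, X is a Markov process with Feller transition function $\{P_t\}_{t \ge 0}$.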

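Proof: To show that $P_t$ defines a Markov transition function, the Chapman-Kolmogorov equations $P_sP_t = P_{s+t}$ need to be verified. The stationary independent increments property gives

$$P_t f(x) = \mathbb{E}\left[f(X_{s+t} - X_s + x)\right] = \mathbb{E}\left[f(X_{s+t} - X_s + x) \mid \mathcal{F}_s\right] \tag{9}$$

for times $s, t \ge 0$. As the expectation is conditioned on $\mathcal{F}_s$, we can replace x by any $\mathcal{F}_s$-measurable random variable. In particular,

$$P_t f(X_s - X_0 + x) = \mathbb{E}\left[f(X_{s+t} - X_0 + x) \mid \mathcal{F}_s\right].$$

This gives

$$P_sP_t f(x) = \mathbb{E}\left[P_t f(X_s - X_0 + x)\right] = \mathbb{E}\left[f(X_{s+t} - X_0 + x)\right] = P_{s+t} f(x)$$

as required. So, $P_t$ defines a Markov transition function. Replacing x by $X_s$ in (9) gives

$$P_t f(X_s) = \mathbb{E}\left[f(X_{s+t}) \mid \mathcal{F}_s\right]$$

so X is Markov with transition function $P_t$.

It only remains to be shown that $P_t$ is Feller. That is, for $f \in C_0(\mathbb{R}^d)$, $P_t f \in C_0(\mathbb{R}^d)$ and $P_t f(x) \to f(x)$ as $t \to 0$. Letting $x_n \in \mathbb{R}^d$ tend to a limit $x$, bounded convergence gives

$$P_t f(x_n) = \mathbb{E}\left[f(X_t - X_0 + x_n)\right] \to \mathbb{E}\left[f(X_t - X_0 + x)\right] = P_t f(x)$$

as $n \to \infty$. So, $P_t f$ is continuous. Similarly, if $\Vert x_n\Vert \to \infty$ then $f(X_t - X_0 + x_n)$ tends to zero, giving $P_t f(x_n) \to 0$. So, $P_t f$ is in $C_0(\mathbb{R}^d)$.

Finally, if $t_n$ is a sequence of times tending to zero then $X_{t_n} - X_0 \to 0$ in probability, giving

$$P_{t_n} f(x) = \mathbb{E}\left[f(X_{t_n} - X_0 + x)\right] \to f(x)$$

as required. ⬜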
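The Chapman-Kolmogorov property can be checked numerically for the Cauchy process. In the sketch below (an illustration, not part of the original proof), the test function $f(x) = 1/(1+x^2)$ is $\pi$ times the Cauchy density of scale 1, so $P_t f$ is $\pi$ times the Cauchy density of scale $1 + t$, giving a closed form to compare against. The quadrature scheme and function names are illustrative choices.

```python
import math


def cauchy_semigroup(t, f, x, n=200):
    """Approximate P_t f(x) = E[f(X_t - X_0 + x)] for the Cauchy process, t > 0.

    Writes X_t - X_0 = t * tan(pi * (U - 1/2)) with U uniform on (0, 1) and
    applies the midpoint rule in U.
    """
    total = 0.0
    for k in range(n):
        u = (k + 0.5) / n
        total += f(x + t * math.tan(math.pi * (u - 0.5)))
    return total / n


def f(x):
    """Test function in C_0(R): pi times the Cauchy density of scale 1."""
    return 1.0 / (1.0 + x * x)


def closed_form(t, x):
    """P_t f(x) for f above: pi times the Cauchy density of scale 1 + t."""
    return (1.0 + t) / ((1.0 + t) ** 2 + x * x)


if __name__ == "__main__":
    s, t, x = 0.5, 1.0, 0.3
    # P_t f against its closed form.
    print(cauchy_semigroup(t, f, x), closed_form(t, x))
    # P_s (P_t f) against P_{s+t} f, using the closed form for the inner step.
    print(cauchy_semigroup(s, lambda y: closed_form(t, y), x), closed_form(s + t, x))
```

The inner step uses the closed form rather than nested quadrature, since the midpoint rule in U loses accuracy when the function is evaluated far from the origin.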

Finally, we can calculate the infinitesimal generator of a Lévy process in terms of its characteristics.

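Theorem 9 Let X be a d-dimensional Lévy process with characteristics $(\Sigma, b, \nu)$ and define the operator A on the bounded and twice continuously differentiable functions $f$ from $\mathbb{R}^d$ to $\mathbb{R}$ as

$$Af(x) = b^i D_i f(x) + \frac{1}{2}\Sigma^{ij} D_{ij} f(x) + \int_{\mathbb{R}^d}\left(f(x + y) - f(x) - \frac{y^i D_i f(x)}{1 + \Vert y\Vert}\right)d\nu(y). \tag{10}$$

Then,

$$M_t = f(X_t) - \int_0^t Af(X_s)\,ds$$

is a local martingale for all such $f$.

In equation (10), $D_i f$ and $D_{ij} f$ denote the first and second order partial derivatives of f, and the summation convention is being used, so that if i or j appears twice in a single term then it is summed over the range $1, \ldots, d$.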

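Proof: Apply the generalized Ito formula to $f(X)$,

$$\begin{aligned}
f(X_t) = f(X_0) &+ \int_0^t D_i f(X_{s-})\,dX^i_s + \frac{1}{2}\int_0^t D_{ij} f(X_{s-})\,d[X^i, X^j]^c_s \\
&+ \sum_{s \le t}\left(f(X_s) - f(X_{s-}) - D_i f(X_{s-})\Delta X^i_s\right).
\end{aligned} \tag{11}$$

Now define the $\mathcal{P} \otimes \mathcal{B}(\mathbb{R}^d)$-measurable function g by

$$g(t, y) = f(X_{t-} + y) - f(X_{t-}) - \frac{y^i D_i f(X_{t-})}{1 + \Vert y\Vert}$$

and let $N$ be the local martingale defined as in (8), with g in place of f. Also, define Y by (6). Then, using the identity $[X^i, X^j]^c_t = t\Sigma^{ij}$, equation (11) can be rewritten as

$$M_t = f(X_0) + \int_0^t D_i f(X_{s-})\,d\left(Y^i_s - sb^i\right) + N_t.$$

As $Y_t - tb$ is a martingale, this shows that M is a local martingale. ⬜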
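For a concrete case, take a Poisson process of rate $\lambda$, which has unit jumps and Lévy measure $\lambda\delta_1$. Assuming the truncation $y/(1+|y|)$ in the generator formula, its drift characteristic is $\lambda/2$ and the generator reduces to $Af(x) = \lambda(f(x+1) - f(x))$. The following sketch (names and parameters are illustrative) compares this with the small-time difference quotient $(\mathbb{E}[f(x + N_t)] - f(x))/t$.

```python
import math


def poisson_expectation(f, x, lam, t, terms=60):
    """E[f(x + N_t)] for a Poisson process N of rate lam, by summing the series."""
    weight = math.exp(-lam * t)  # P(N_t = 0)
    total = 0.0
    for k in range(terms):
        total += weight * f(x + k)
        weight *= lam * t / (k + 1)  # now P(N_t = k + 1)
    return total


def generator(f, df, x, lam):
    """A f(x) with Sigma = 0, b = lam / 2 and nu = lam * delta_1.

    Assumes the truncation y / (1 + |y|), which equals 1/2 at jump size 1.
    df is the derivative of f.
    """
    return 0.5 * lam * df(x) + lam * (f(x + 1) - f(x) - 0.5 * df(x))


if __name__ == "__main__":
    lam, x, t = 2.0, 0.4, 1e-6
    rate = (poisson_expectation(math.cos, x, lam, t) - math.cos(x)) / t
    # The two printed values should be close for small t.
    print(rate, generator(math.cos, lambda y: -math.sin(y), x, lam))
```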

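In particular, if f is in the space $C_0^2(\mathbb{R}^d)$ of twice continuously differentiable functions vanishing at infinity and $Af \in C_0(\mathbb{R}^d)$ then Theorem 9 shows that f is in the domain of the generator of the Feller process X, and A is the infinitesimal generator. So,

$$Af(x) = \lim_{t \to 0}\frac{1}{t}\left(P_t f(x) - f(x)\right) = \lim_{t \to 0}\frac{1}{t}\mathbb{E}\left[f(X_t - X_0 + x) - f(x)\right]$$

where convergence is uniform on $\mathbb{R}^d$. For any Lévy process for which the distribution of $X_t - X_0$ is known, this allows us to compute $Af$ and, then, read off the Lévy characteristics. In particular, if $f$ is twice continuously differentiable with compact support contained in $\mathbb{R}^d \setminus \{0\}$ then,

$$\int f\,d\nu = Af(0) = \lim_{t \to 0}\frac{1}{t}\mathbb{E}\left[f(X_t - X_0)\right].$$

Applying this to the Cauchy process, where $X_t - X_0$ has probability density function $p_t(x) = \frac{t}{\pi(t^2 + x^2)}$, gives

$$\int f\,d\nu = \lim_{t \to 0}\int f(x)\frac{dx}{\pi(t^2 + x^2)} = \int f(x)\frac{dx}{\pi x^2}.$$

So, the Cauchy process has Lévy measure $d\nu(x) = \frac{dx}{\pi x^2}$, agreeing with the previous computation.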
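This limit is easy to observe numerically. The sketch below (an illustration with ad hoc names and quadrature) takes a smooth bump function supported on $[1, 2]$, computes $\frac{1}{t}\mathbb{E}[f(X_t - X_0)]$ for the Cauchy process by integrating against its density of scale t, and compares the result with the integral of f against $dx/(\pi x^2)$.

```python
import math


def bump(x, lo=1.0, hi=2.0):
    """Smooth function with compact support [lo, hi], which excludes 0."""
    if x <= lo or x >= hi:
        return 0.0
    return math.exp(-1.0 / ((x - lo) * (hi - x)))


def integrate(g, lo, hi, n=20000):
    """Midpoint rule for the integral of g over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(g(lo + (k + 0.5) * h) for k in range(n))


def small_time_rate(t):
    """(1/t) E[f(X_t - X_0)] for the Cauchy process, with f = bump."""
    return integrate(lambda x: bump(x) / (math.pi * (t * t + x * x)), 1.0, 2.0)


def levy_integral():
    """Integral of f = bump against d nu(x) = dx / (pi x^2)."""
    return integrate(lambda x: bump(x) / (math.pi * x * x), 1.0, 2.0)


if __name__ == "__main__":
    exact = levy_integral()
    for t in (0.5, 0.1, 1e-3):
        # The gap to the Levy-measure integral shrinks as t decreases.
        print(t, small_time_rate(t), exact)
```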

There is a minor typo I found when following your calculations regarding the characteristic exponent of the Cauchy process.

“Here, the identity $e^{iax} + e^{-iax} - 2 = -4\sin^2(ax)$ is being used followed by the substitution … ”

The identity is $e^{iax} + e^{-iax} - 2 = -4\sin^2(ax/2)$ (as you correctly use it subsequently). Thanks for the nice site!

Chris

Fixed. Thanks!

Hello, your posts are very interesting. By the way, I would like to ask you a question as follows:

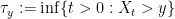

Let X be a Levy processes with no positive jumps and then we have

then we have

Could you explain why? Does it also hold for a Lévy process with no negative jumps? If X is a Hunt process with no positive jumps, does it still hold?

Thank you very much!

Hi. Strictly speaking that’s not true. If X0 > y then and . You can only conclude that if you assume that X0 ≤ 0 or, alternatively, if you restrict to . Then, the conclusion holds for any cadlag process, and is nothing specific to Lévy processes. In fact, you have for any right-continuous process and if it has left limits. If it also has no positive jumps then .

Hallo, I have a question to George Lowther.

Do you know an easy proof of the fact that for two independent Lévy processes $X$ and $Y$ the covariation process $[X,Y]$ is equal to zero? I have a proof of this result but I feel that it is too complicated and I would like to make it shorter. Thank you very much.

Best regards,

Paolo

How can we get the value of $d\nu(x)$?

What literature did you use in Theorem 5?

A Lévy process has independent and stationary increments. In addition, it is assumed to have cadlag paths or be continuous in probability (one implies the other, given independent and stationary increments).

If we drop the assumption on continuity in probability, what are we left with? Are there processes with independent and stationary increments that do not have a cadlag modification (i.e. a Lévy modification)? I have been struggling to come up with an example of such a process.

There are examples using the axiom of choice (i.e., assuming ZFC set theory). There are even deterministic examples, where $X_{s+t} = X_s + X_t$ and X is not continuous (https://en.wikipedia.org/wiki/Cauchy's_functional_equation), but these are all non-measurable (see http://math.stackexchange.com/q/318523/1321) and use the axiom of choice in the construction. There are axiomatizations/models of set theory where all subsets of the reals are Lebesgue measurable (e.g., Solovay model) and then I think that the continuity condition in the definition of Lévy processes would be superfluous. However, such axiomatizations do not allow the uncountable axiom of choice.


Thanks. Sounds to me that assuming stochastic continuity is then hardly a restriction at all, but rather serves to exclude some pathological cases.

Yes, I agree.

Hello, I have a question and need your help. Suppose we are given a random variable $\xi$ with some special distribution. Can we find a Lévy process $X$ that admits $\xi$ as its running maximum up to an exponential time $T$, i.e., such that $M_T := \max_{0\leq t\leq T} X_t$ has the same distribution as $\xi$, where $T$ is exponentially distributed?

Thank you!